+86 (0)10 6276-1084

hewang

Room 106-1, Courtyard No.5, Jingyuan

Embodied AI, 3D Computer Vision, Robotics https://hughw19.github.io/Bio-Sketch

Dr. He Wang is a tenure-track assistant professor in the Center on Frontiers of Computing Studies (CFCS) at Peking University. He founded and leads the Embodied Perception and InteraCtion (EPIC) Lab (homepage: https://hughw19.github.io), whose mission is to develop generalizable skills and embodied multimodal large models for robots in pursuit of embodied AGI. He is also the director of the PKU-Galbot joint lab of embodied AI and the BAAI center of embodied AI. He has published more than 50 papers in top computer vision, robotics, and machine learning conferences and journals, including CVPR, ICCV, ECCV, TRO, ICRA, IROS, NeurIPS, ICLR, and AAAI. His pioneering work on category-level 6D pose estimation, NOCS, received the 2022 World Artificial Intelligence Conference Youth Outstanding Paper (WAICYOP) Award. His work was also an ICCV 2023 best paper finalist and an ICRA 2023 outstanding manipulation paper award finalist, and received a Eurographics 2019 best paper honorable mention. He serves as an associate editor of Image and Vision Computing and served as an area chair for CVPR 2022 and WACV 2022. Before joining Peking University, he received his Ph.D. from Stanford University in 2021, advised by Prof. Leonidas J. Guibas, and his Bachelor's degree from Tsinghua University in 2014.

Publications

Selected Publications

*: equal contribution, †: corresponding author

Published Works

ASRO-DIO: Active Subspace Random Optimization Based Depth Inertial Odometry

Domain Randomization-Enhanced Depth Simulation and Restoration for Perceiving and Grasping Specular and Transparent Objects

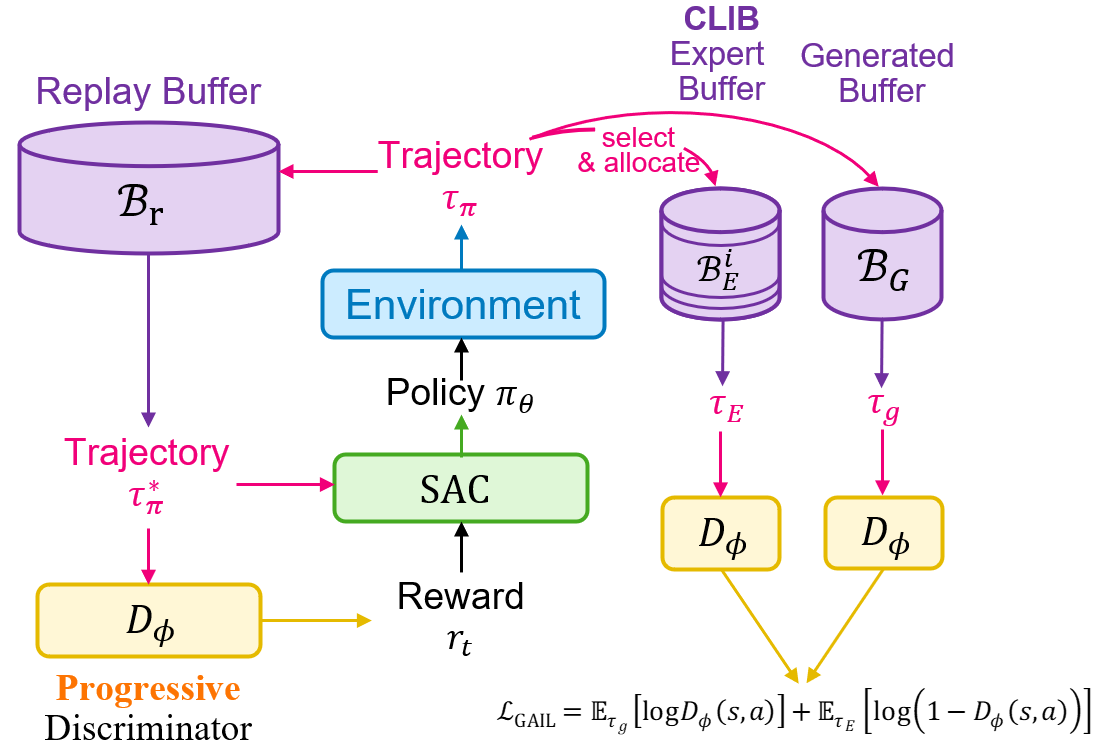

Learning Category-Level Generalizable Object Manipulation Policy via Generative Adversarial Self-Imitation Learning from Demonstrations

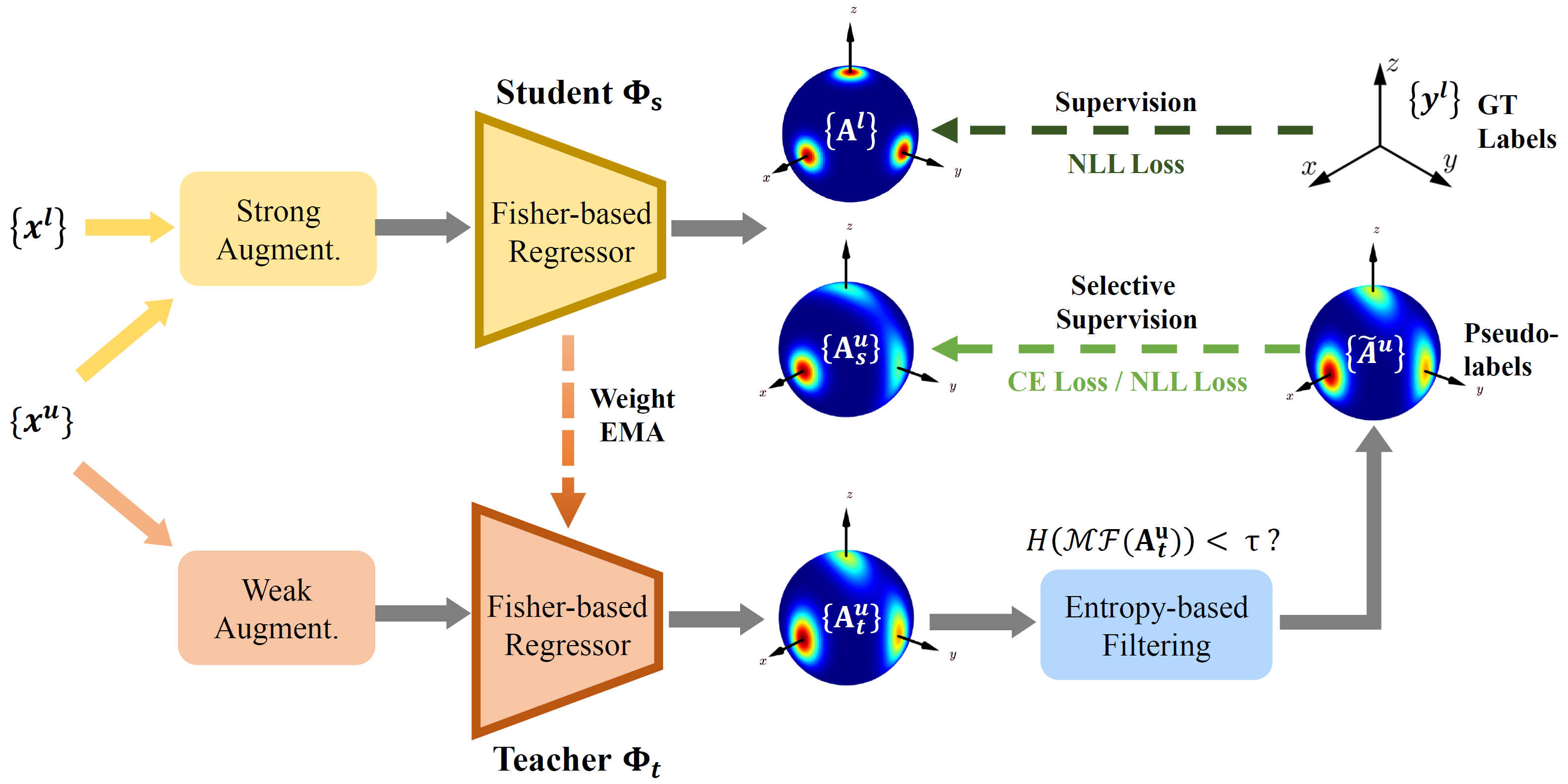

FisherMatch: Semi-Supervised Rotation Regression via Entropy-based Filtering

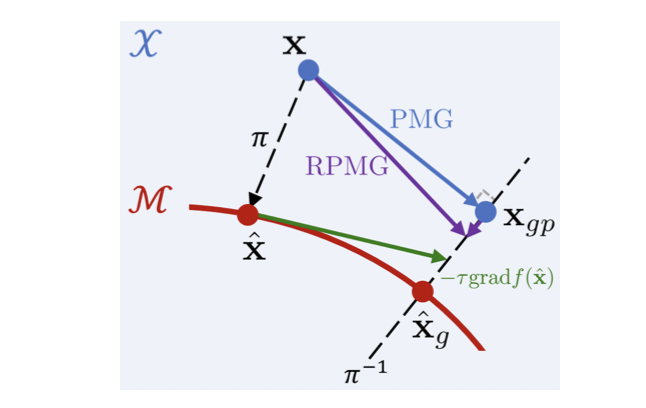

Projective Manifold Gradient Layer for Deep Rotation Regression

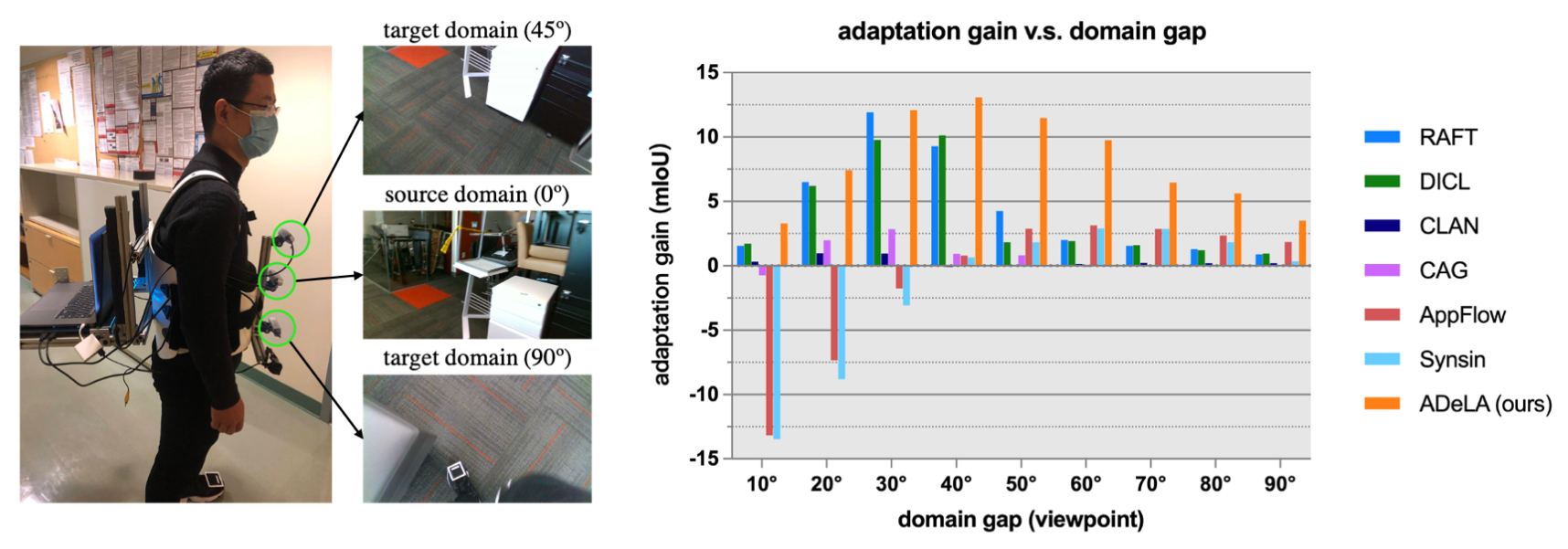

ADeLA: Automatic Dense Labeling with Attention for Viewpoint Adaptation in Semantic Segmentation

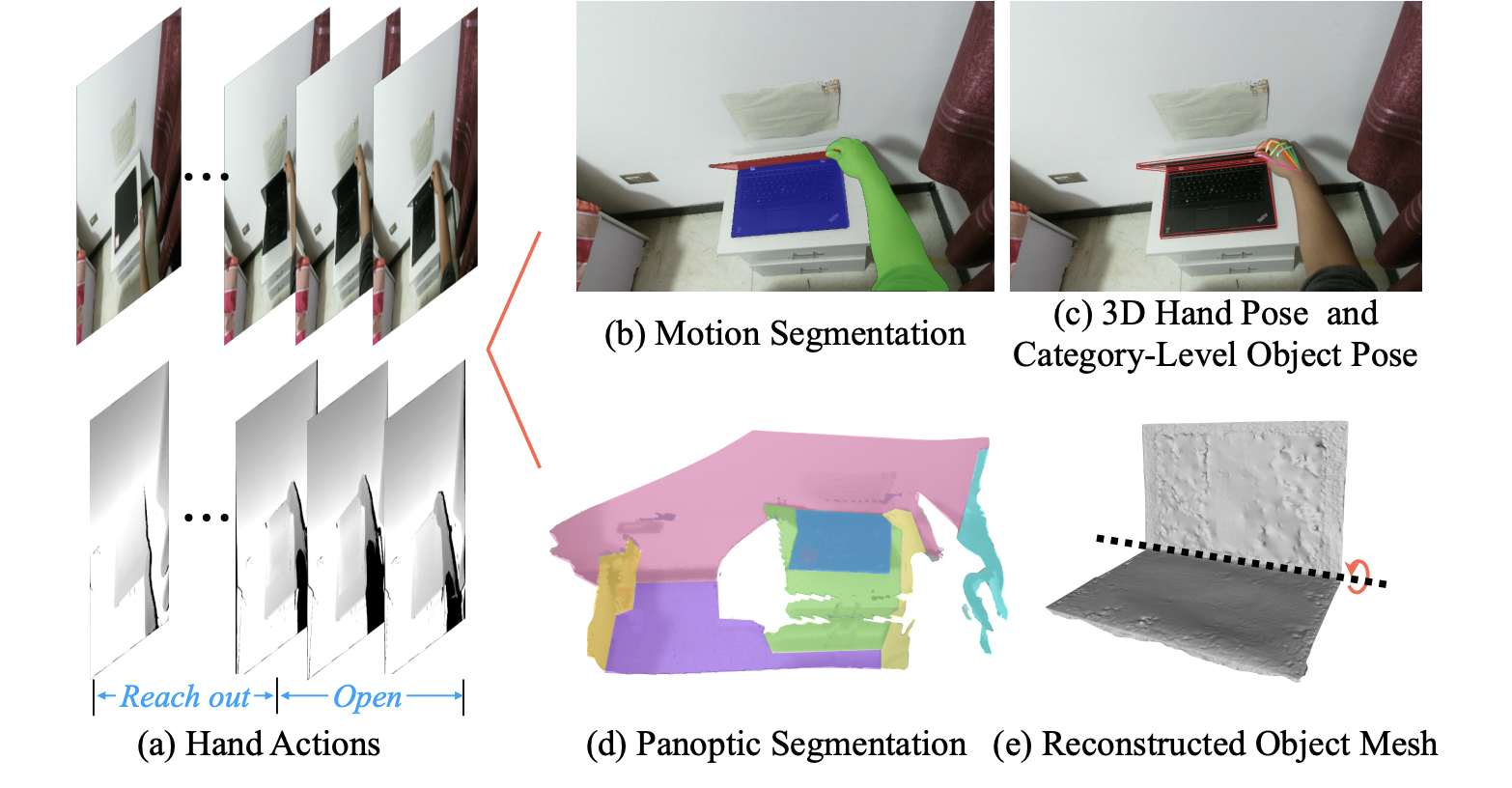

HOI4D: A 4D Egocentric Dataset for Category-Level Human-Object Interaction

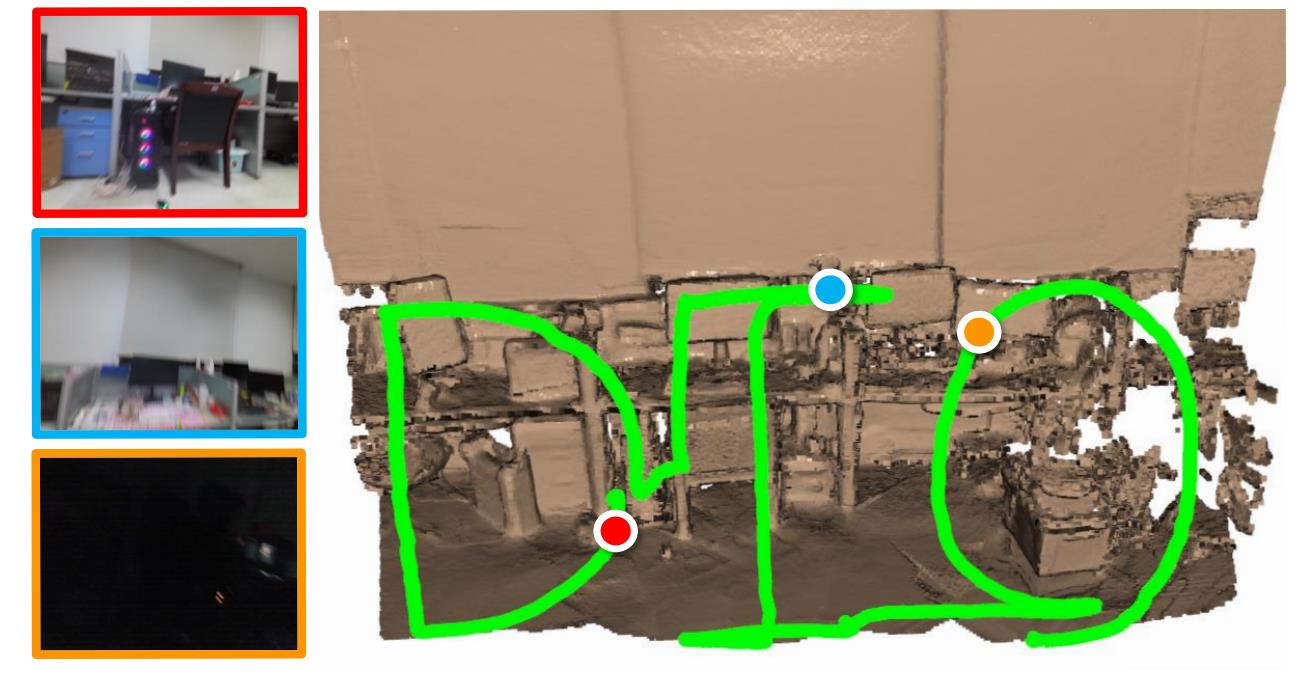

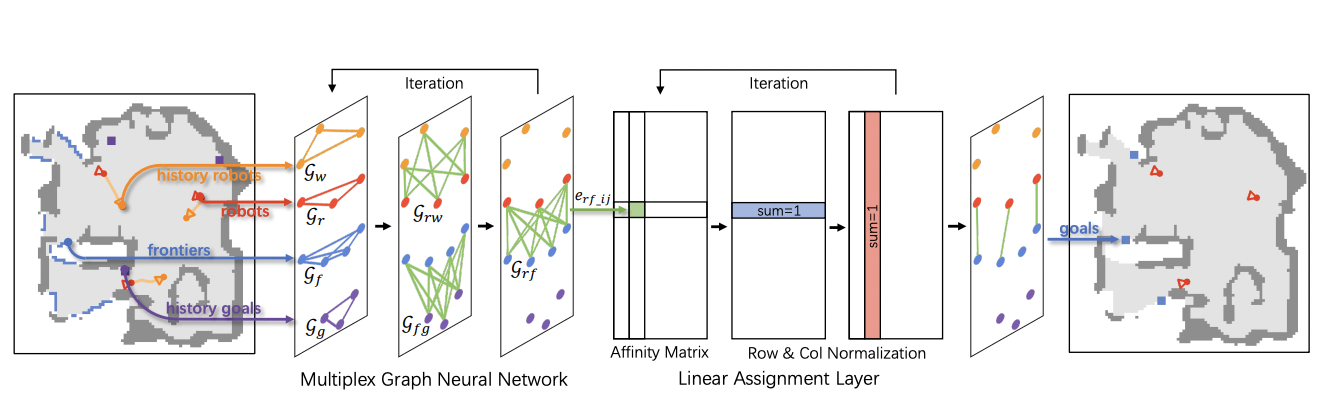

Multi-Robot Active Mapping via Neural Bipartite Graph Matching

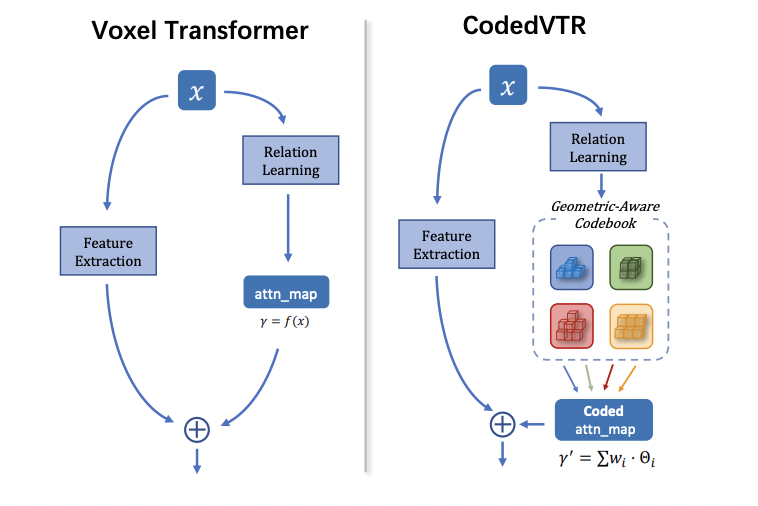

CodedVTR: Codebook-based Sparse Voxel Transformer with Geometric Guidance

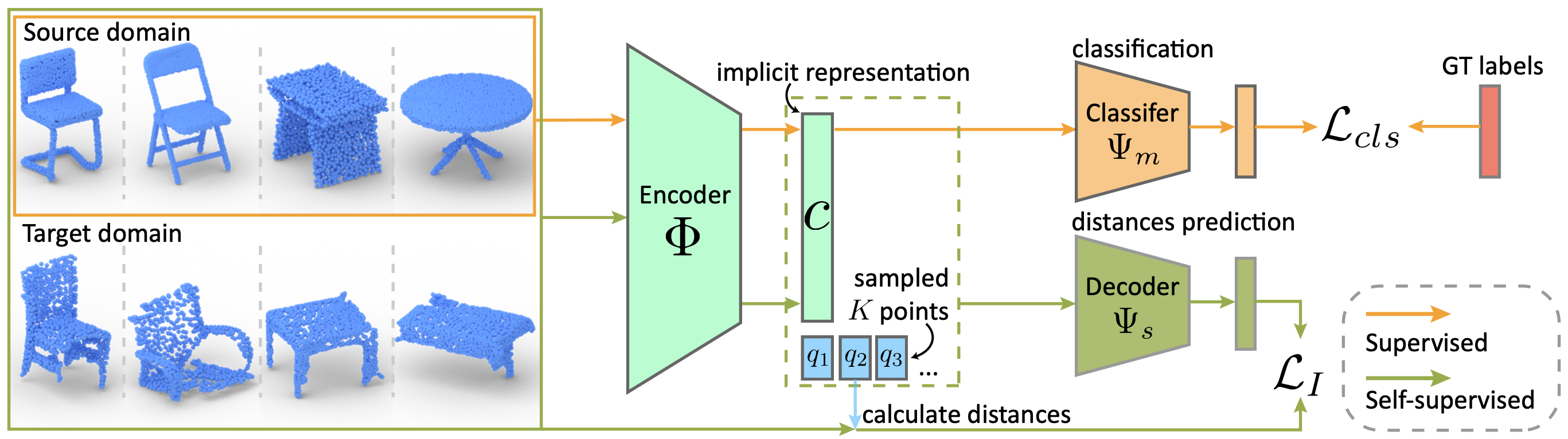

Domain Adaptation on Point Clouds via Geometry-Aware Implicits

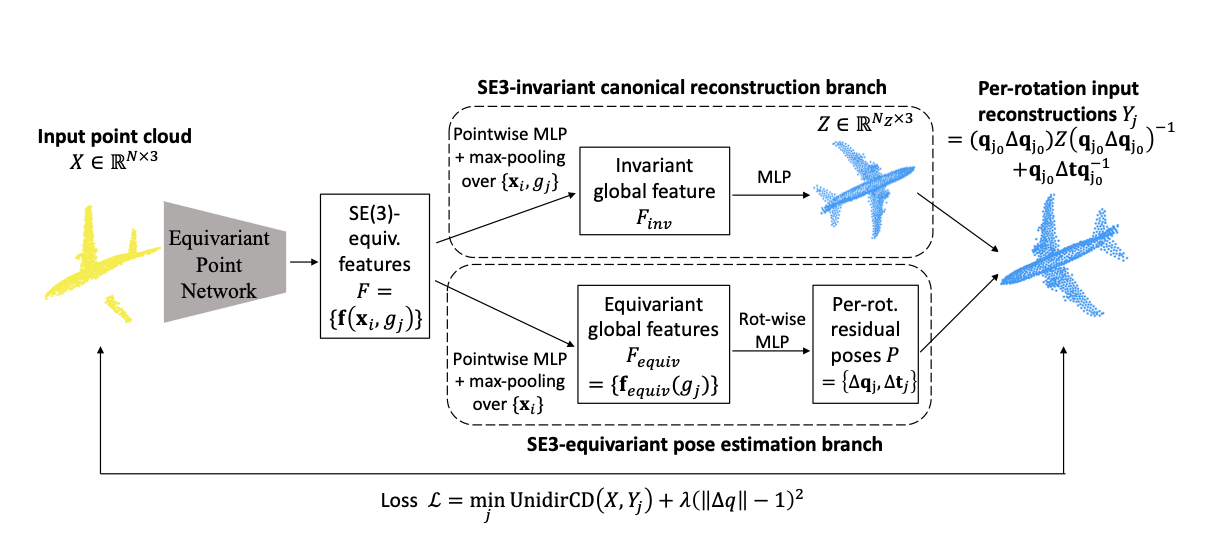

Leveraging SE(3) Equivariance for Self-supervised Category-Level Object Pose Estimation from Point Clouds

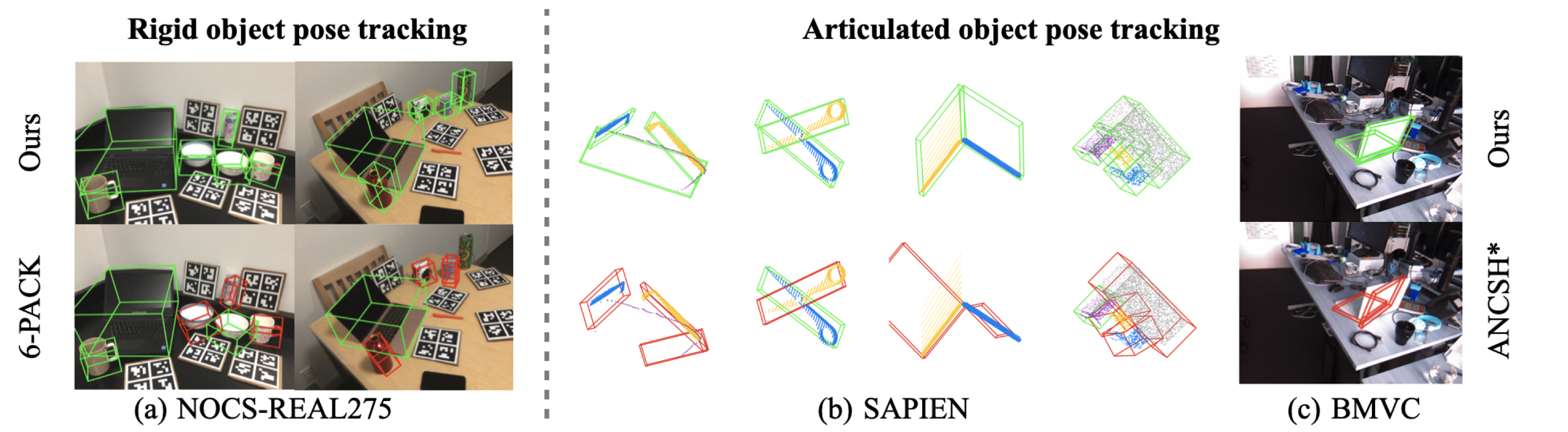

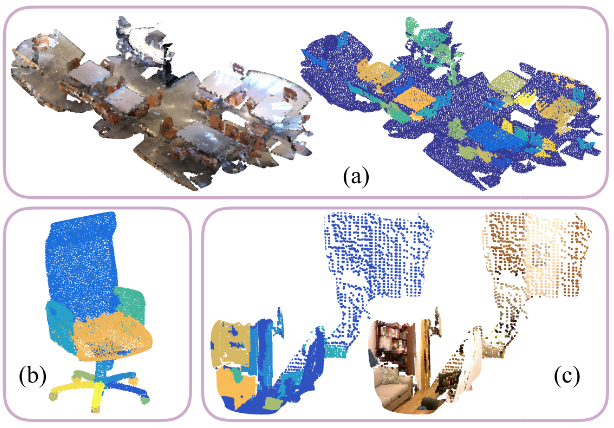

CAPTRA: CAtegory-level Pose Tracking for Rigid and Articulated Objects from Point Clouds

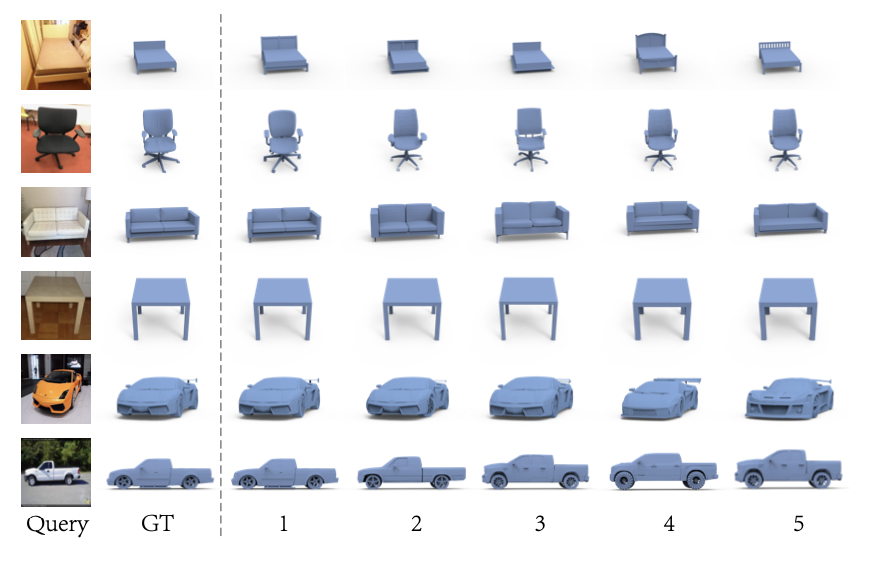

Single Image 3D Shape Retrieval via Cross-Modal Instance and Category Contrastive Learning

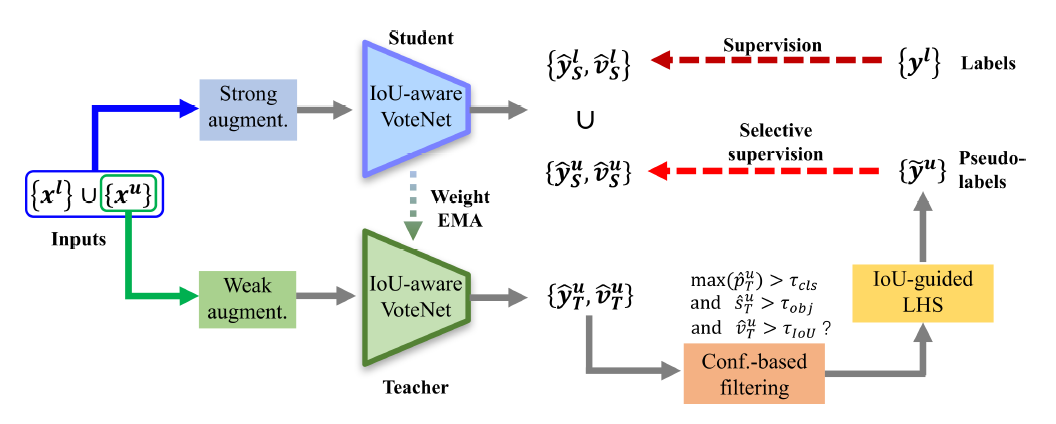

3DIoUMatch: Leveraging IoU Prediction for Semi-Supervised 3D Object Detection

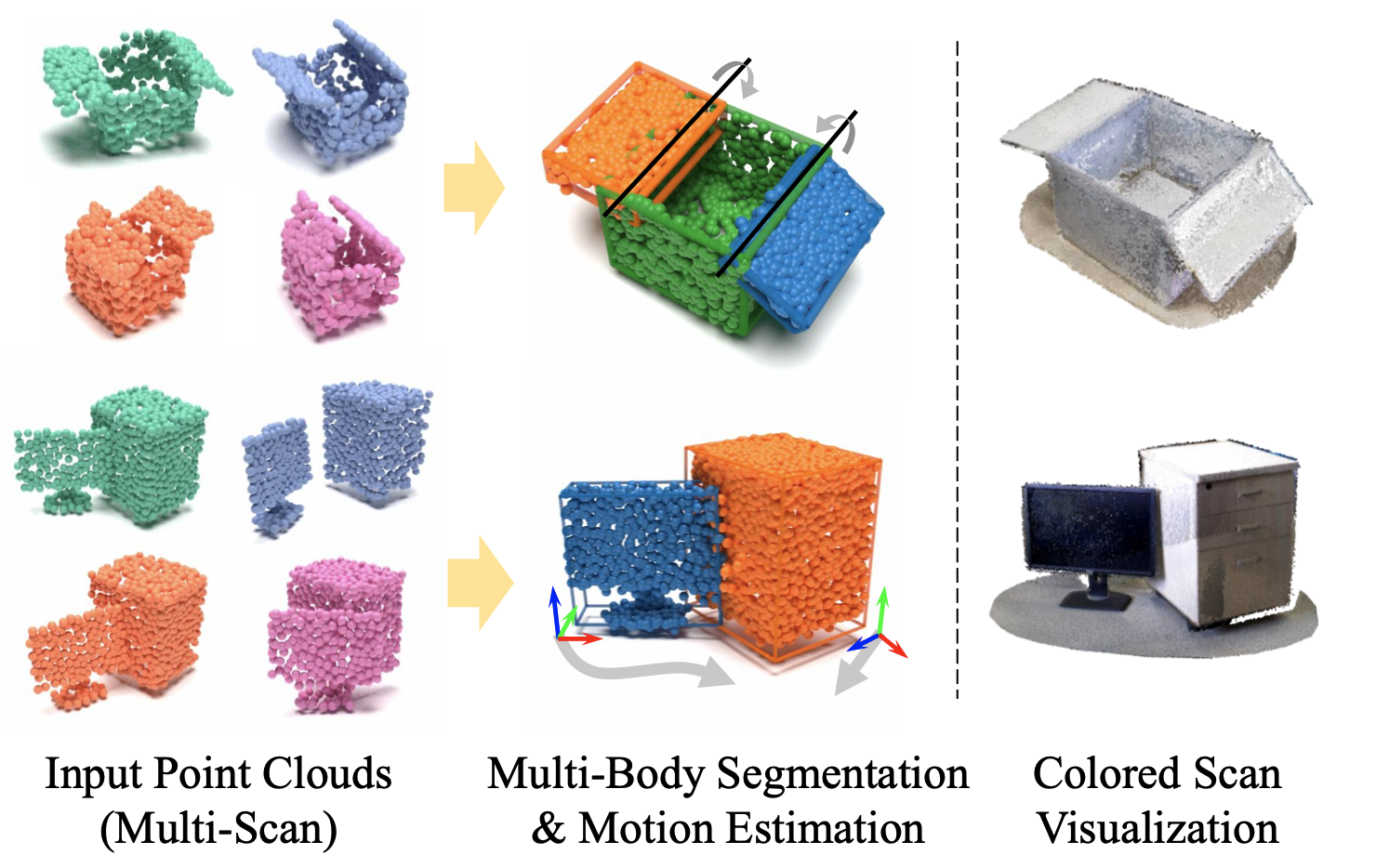

MultiBodySync: Multi-Body Segmentation and Motion Estimation via 3D Scan Synchronization

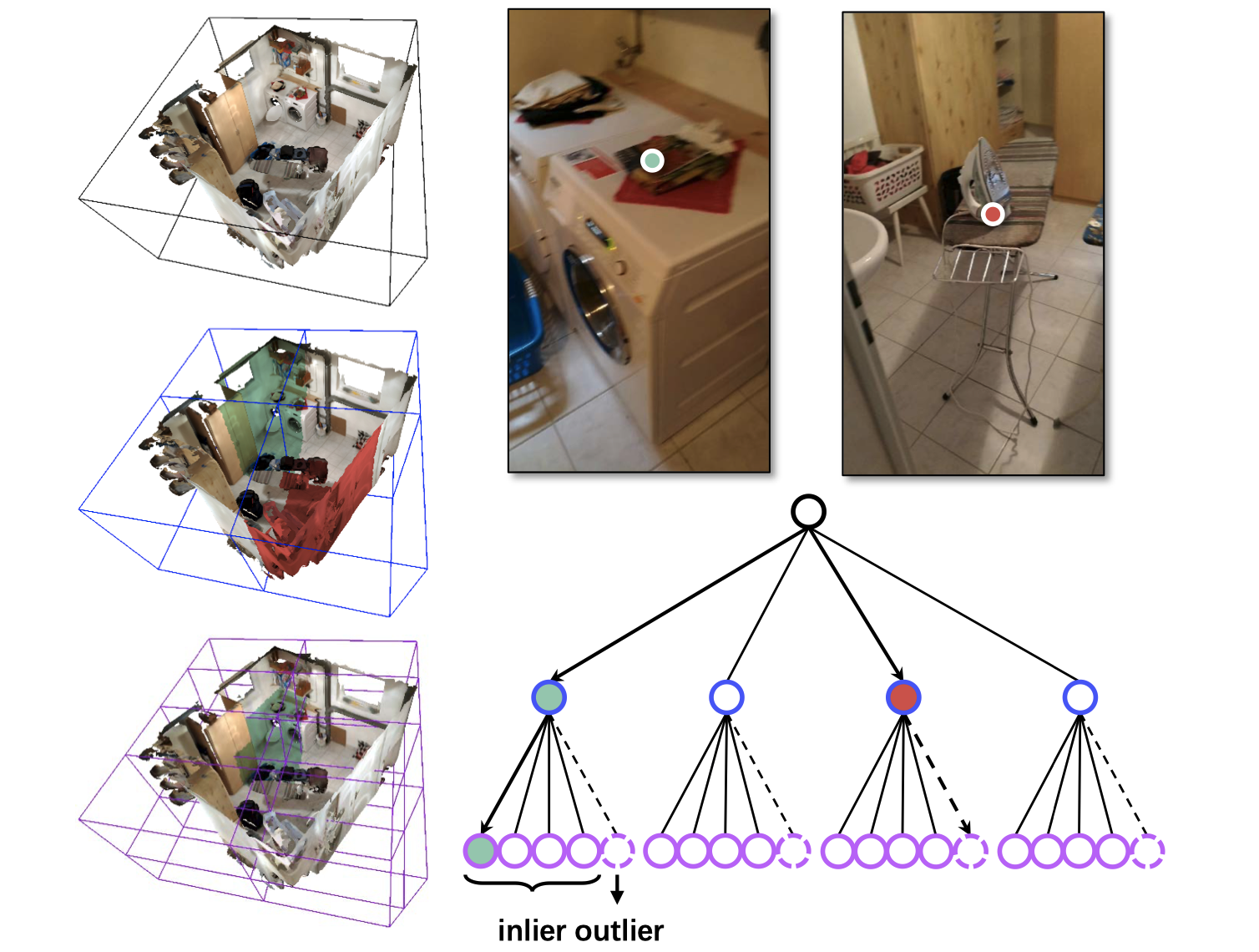

Robust Neural Routing Through Space Partitions for Camera Relocalization in Dynamic Indoor Environments

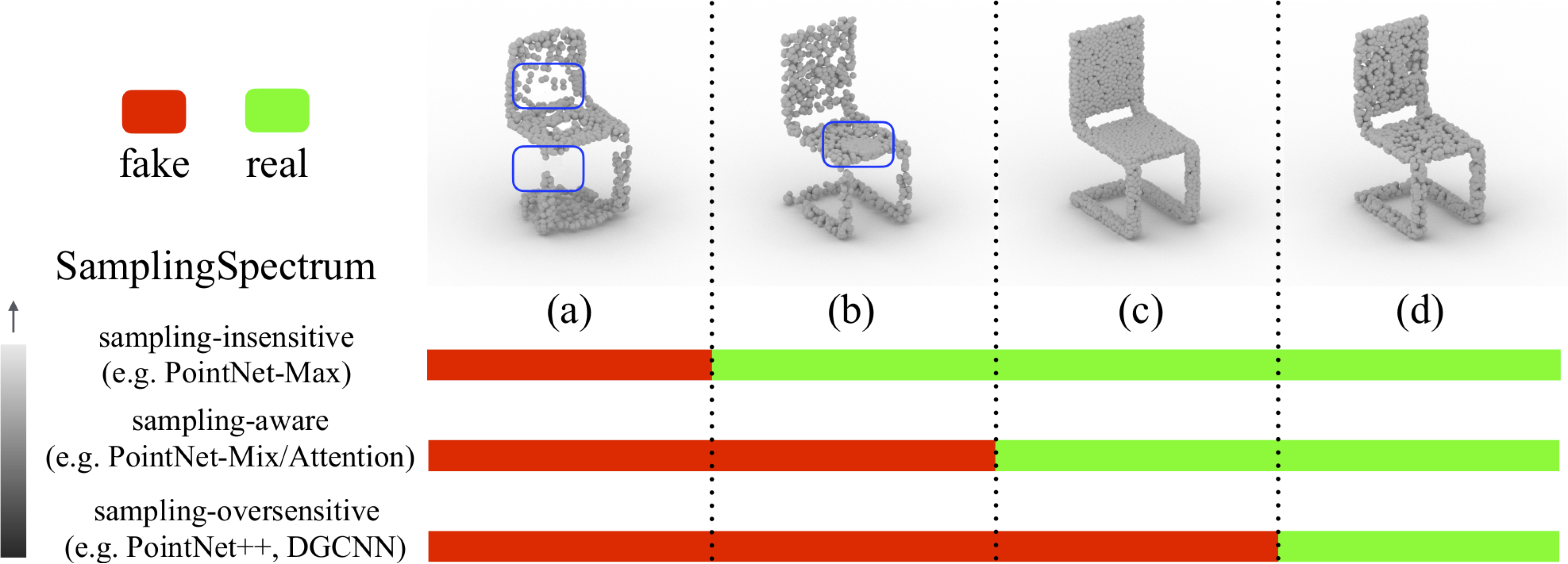

Rethinking Sampling in 3D Point Cloud Generative Adversarial Networks

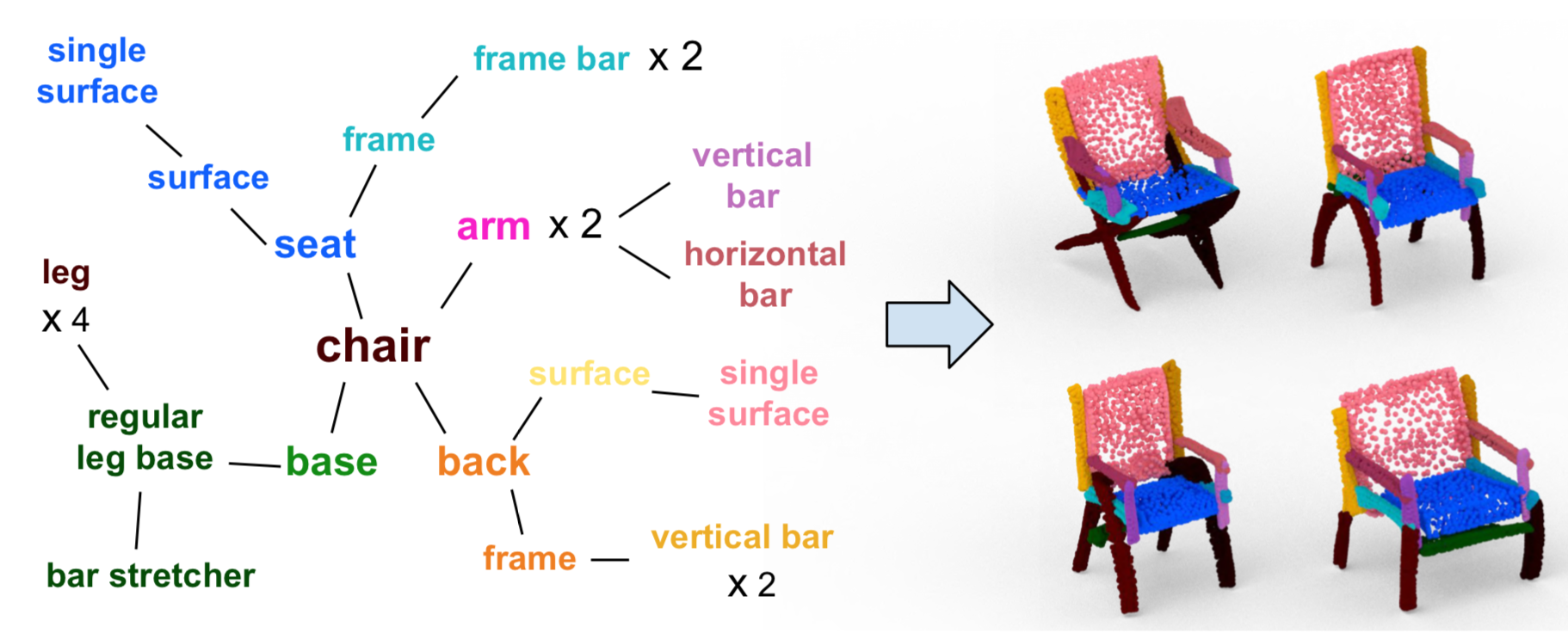

PT2PC: Learning to Generate 3D Point Cloud Shapes from Part Tree Conditions

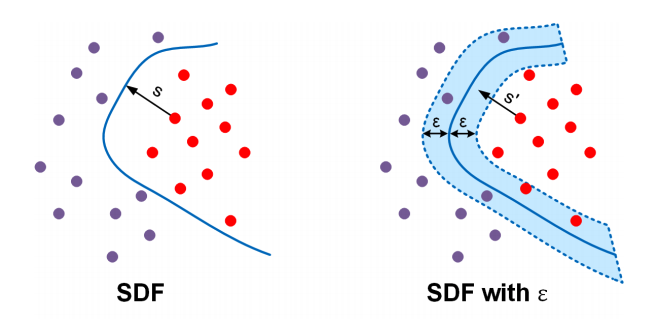

Curriculum DeepSDF

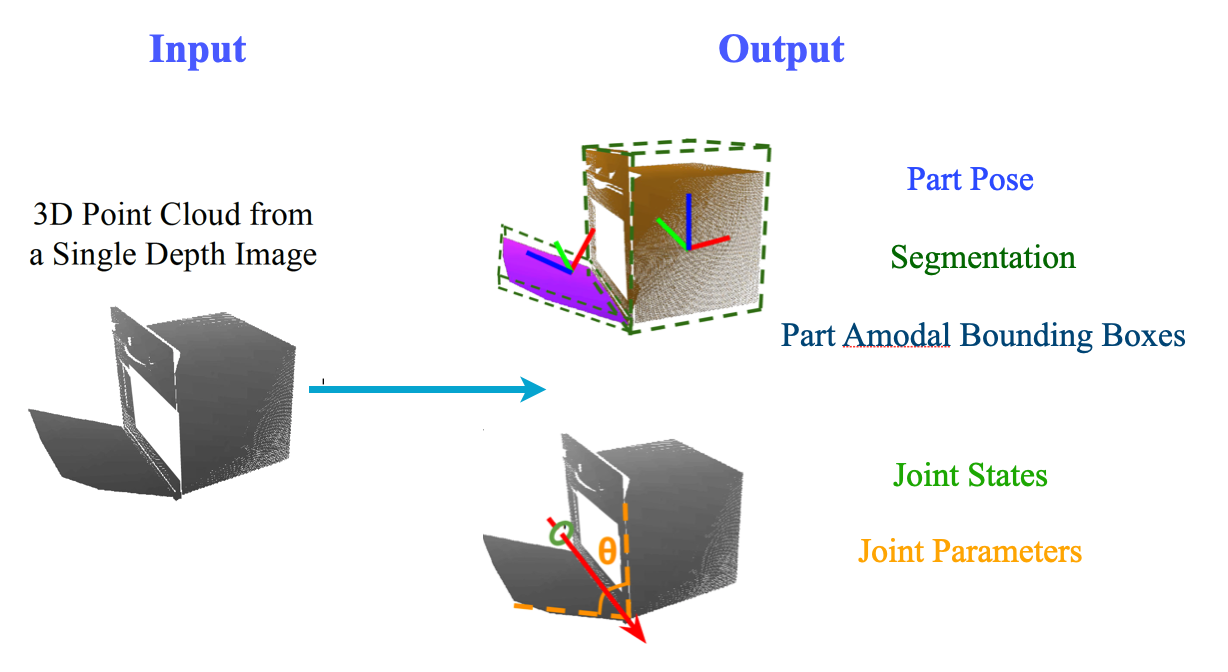

Category-level Articulated Object Pose Estimation

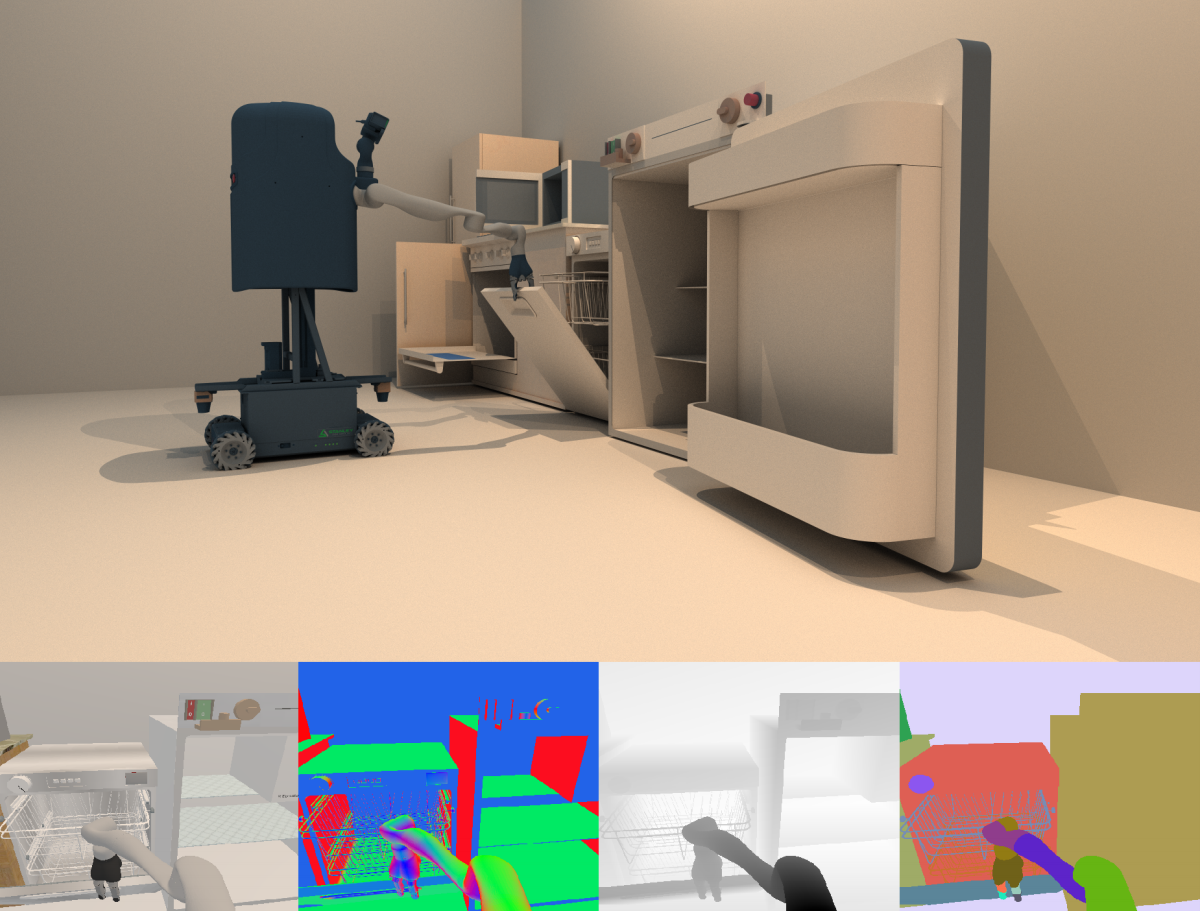

SAPIEN: A SimulAted Part-based Interactive ENvironment

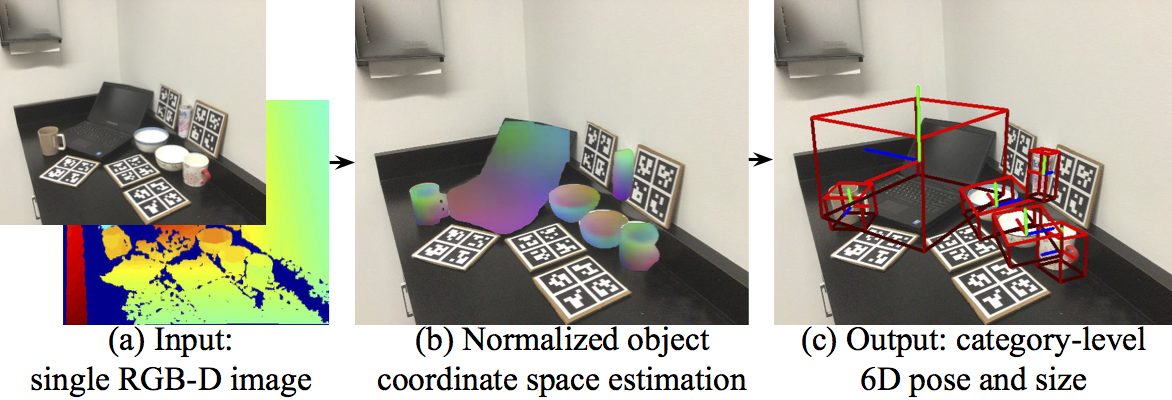

Normalized Object Coordinate Space for Category-Level 6D Object Pose and Size Estimation

GSPN: Generative Shape Proposal Network for 3D Instance Segmentation in Point Cloud

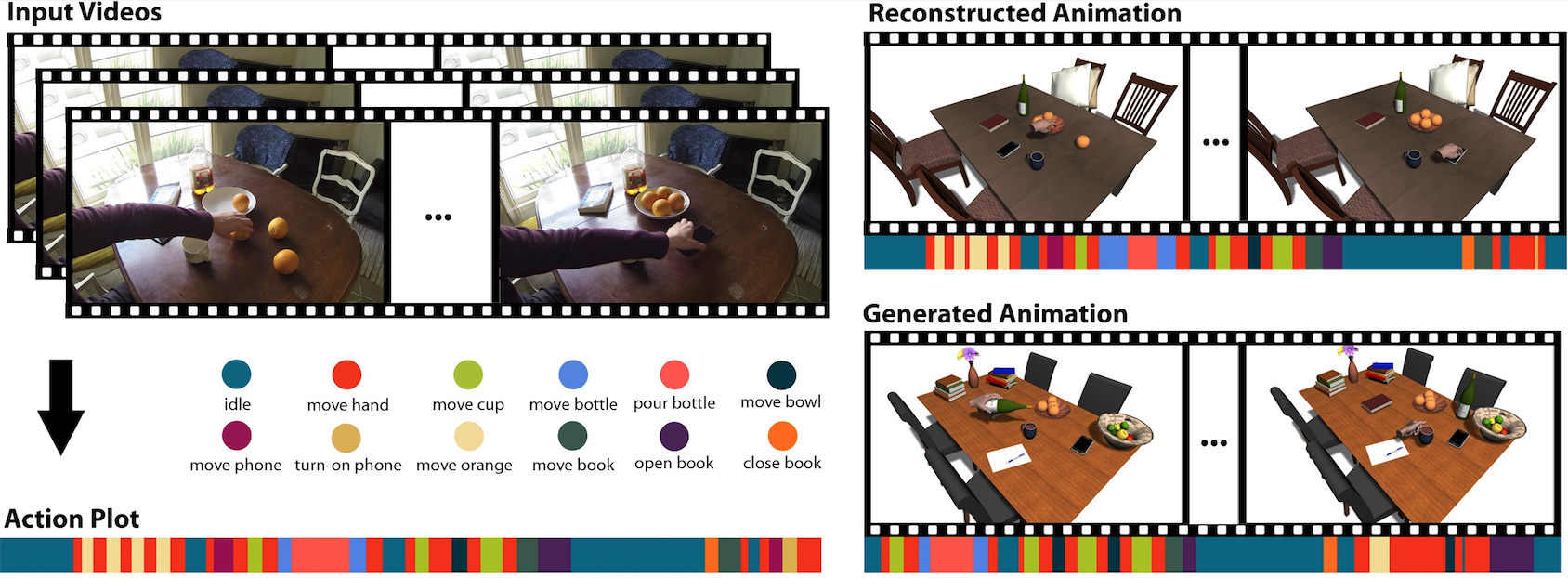

Learning a Generative Model for Multi-Step Human-Object Interactions from Videos