Schedule | Audience Registration Opens for CFCS Embodied Computing Day

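Embodiment means having a physical body that supports sensing and movement. Compared with a disembodied agent, an embodied agent gains the capacity for interaction and active perception that a physical body provides. Proponents of embodiment further conjecture that this difference is the key to the emergence of general intelligence. The topic is now widely discussed across computer science, artificial intelligence, and cognitive science. Driven by deep learning, it has brought vision, robotics, natural language processing, cognition, and other fields together into a vibrant area of interdisciplinary research.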

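CFCS Embodied Computing Day is a half-day workshop on embodied computing, focused on learning and computation for embodied agents. Ten professors and scholars from Peking University, Tsinghua University, The Chinese University of Hong Kong, Carnegie Mellon University, Princeton University, UC San Diego, and UC Santa Cruz have been invited to give talks and join the discussion. Topics include embodied perception, reinforcement learning, medical robotics, and object manipulation and grasping. The workshop aims to share research directions and methods in embodied computing, encourage exchange across fields, and explore future research directions together.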

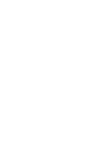

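Schedule

Interactive Audience Registration

↑↑ Scan the mini program code to register ↑↑

The first 50 people to register through the mini program will receive the Zoom meeting details and can interact directly with the speakers.

Successful registrants will be notified by email on December 10.

Live Stream

Missed out on an interactive spot?

No problem. Embodied Computing Day will also be streamed live on the center's Bilibili channel, where a moderator will collect questions from the live room and relay them to the session.

Bilibili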

https://live.bilibili.com/22051279

Talk Information

Ordered by talk time

1. Generalization in Real-Robot Visuo-Motor Control

Abstract

TBD

Biography

Xiaolong Wang is an Assistant Professor in the ECE department at the University of California, San Diego. He is affiliated with the CSE department, the Center for Visual Computing, the Contextual Robotics Institute, and the TILOS NSF AI Institute. He received his Ph.D. in Robotics at Carnegie Mellon University. His postdoctoral training was at the University of California, Berkeley. His research focuses on the intersection between computer vision and robotics. He is particularly interested in learning visual representations from videos in a self-supervised manner and using these representations to guide robot learning. Xiaolong has served as an Area Chair for CVPR, ICCV, ECCV, and ICLR, and as a co-chair for IROS and ICRA. He is a recipient of the Facebook Fellowship, the Nvidia Fellowship, and the Sony Research Award.

2. Modeling the 3D Physical World for Embodied Intelligence

Abstract

Many promising directions in industry and academia, such as the metaverse and autonomy (vehicles and robots), depend on the ability to model the 3D physical world for interaction. Embodied AI is a rising paradigm of AI that aims to enable agents to interact with the physical world. Embodied agents can acquire a large amount of data through interaction with the physical world, which makes it possible for them to close the perception-cognition-action loop and learn continuously from the world to revise their internal models. In this talk, I will present my group's work on building an ecosystem for embodied AI. This series of work unifies efforts ranging from building a virtual space for collecting interaction data to proposing effective closed-loop learning algorithms. We will also discuss the challenges and opportunities in this area.

Biography

Hao Su is an Assistant Professor of Computer Science at the University of California, San Diego. He is the Director of the Qualcomm Embodied AI Lab at UCSD, a founding member of the Data Science Institute, and a member of the Center for Visual Computing and the Contextual Robotics Institute. He works on algorithms to model, understand, and interact with the physical world. His interests span computer vision, machine learning, computer graphics, and robotics, all areas in which he has published and lectured extensively.

Hao Su obtained his Ph.D. from Stanford in 2018. At Stanford and UCSD he developed widely used datasets and software such as ImageNet, ShapeNet, PointNet, PartNet, and SAPIEN, and more recently he has been driving the development of ManiSkill. He also developed new courses to promote machine learning methods for 3D geometry and embodied AI. He has served as an Area Chair or Associate Editor for top conferences and journals in computer vision (ICCV/ECCV/CVPR), computer graphics (SIGGRAPH/ToG), robotics (IROS/ICRA), and machine learning (ICLR).

3. Trustworthy Reinforcement Learning for AI Deployment

Abstract

With its vast potential to tackle some of the world's most pressing problems, reinforcement learning (RL) is applied to transportation, manufacturing, security, and healthcare. As RL has started to shift towards deployment at a large scale, its rapid development is coupled with as much risk as benefits. Before consumers embrace RL-empowered services, researchers are tasked with proving their trustworthiness. In this talk, I will overview trustworthy reinforcement learning in three aspects: robustness, safety, and generalization. I will introduce taxonomies, definitions, methodologies, and popular benchmarks in each category. I will also share the lessons we learned and my outlook for future research directions.

Biography

Ding Zhao is currently an assistant professor in the Department of Mechanical Engineering at Carnegie Mellon University, with affiliations at the Computer Science Department, Robotics Institute, and CyLab on Security and Privacy. Directing the CMU Safe AI Lab, his research focuses on the theoretical and practical aspects of safely deploying AI to safety-critical applications, including self-driving, assistant robots, healthcare diagnosis, and cybersecurity. He is the recipient of the National Science Foundation CAREER Award, CMU George Tallman Ladd Research Award, MIT Technology Review 35 under 35 Award in China, Ford University Collaboration Award, Carnegie-Bosch Research Award, Struminger Teaching Award, and many industrial fellowship awards. He worked with leading industrial partners, including Google, Apple, Ford, Uber, IBM, Adobe, Bosch, Toyota, and Rolls-Royce. He is a visiting researcher in the Robotics Team at Google Brain.

4. When Is Partially Observable Reinforcement Learning Not Scary?

Abstract

Partial observability is ubiquitous in applications of Reinforcement Learning (RL), in which agents learn to make a sequence of decisions despite lacking complete information about the latent states of the controlled system. Partially observable RL is notoriously difficult in theory: well-known information-theoretic results show that learning partially observable Markov decision processes (POMDPs) requires an exponential number of samples in the worst case. Yet this does not rule out the existence of interesting subclasses of POMDPs that cover a large set of partially observable applications in practice while remaining tractable.

In this talk we identify a rich family of tractable POMDPs, which we call weakly revealing POMDPs. This family rules out the pathological instances of POMDPs where observations are uninformative to a degree that makes learning hard. We prove that for weakly revealing POMDPs, a simple algorithm combining optimism and Maximum Likelihood Estimation (MLE) is sufficient to guarantee a polynomial sample complexity. We will also show how these frameworks and techniques further lead to sample-efficient learning for RL with function approximation and for multiagent RL under partial observability. To the best of our knowledge, this gives the first line of provably sample-efficient results for learning from interactions in partially observable RL. This is based on joint work with Qinghua Liu, Alan Chung, Sham Kakade, Akshay Krishnamurthy, Praneeth Netrapalli, and Csaba Szepesvari.

Biography

Chi Jin is an assistant professor in the Department of Electrical and Computer Engineering at Princeton University. He obtained his PhD degree in Computer Science at the University of California, Berkeley, advised by Michael I. Jordan. His research mainly focuses on theoretical machine learning, with special emphasis on nonconvex optimization and reinforcement learning. His representative work includes proving that noisy gradient descent escapes saddle points efficiently and proving the efficiency of Q-learning and least-squares value iteration when combined with optimism in reinforcement learning.

5. Embodied Intelligence for Surgical Robots: A Domain-specific Simulator and Pilot Studies

Abstract

With rapid advances in medicine and engineering, autonomy in robotic surgery is increasingly sought as a way to transform healthcare, given its promise of performing procedures with greater precision and smaller incisions. Embodied intelligence, together with its sensorimotor activity, smart knowledge extraction, and automation, has demonstrated remarkable potential for automating robotic tasks and a capacity for continuous improvement. In this talk, I will present our recent work on surgical embodied intelligence, focusing on 1) a domain-specific, task-rich, realistic, and user-friendly simulator that serves both surgical roboticists and AI researchers, 2) the challenges of applying existing learning-based methods to automate the developed tasks and ways to overcome them, and 3) the use of human interaction in surgical embodied AI systems and the interesting open questions that remain.

Biography

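Qi Dou is an Assistant Professor in the Department of Computer Science and Engineering at The Chinese University of Hong Kong, and a member of the CUHK T Stone Robotics Institute and the Multi-Scale Medical Robotics Center in Hong Kong. Dou's research focuses on medical image analysis and intelligent robotic surgery. The team has published more than 100 papers in top conferences and journals in medical imaging, artificial intelligence, and robotics, with more than 14,000 total citations on Google Scholar. Awards include the ICRA Best Paper Award in Medical Robotics, the MICCAI Best Paper Award, and the MedIA Best Paper Award. Dou has served as Program Co-Chair of the international conferences MIDL 2021, MIDL 2022, MICCAI 2022, and IPCAI 2023, and as an associate editor of several international journals.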

6. Towards Unified Robotics Manipulation

Abstract

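Enabling robots of different models to manipulate the wide variety of objects found in daily life is one of the central research topics in embodied intelligence. The challenges are the large number of interaction tasks and the great diversity of object shapes and structures. We have been exploring a unified approach to manipulation policies, so that a single method can address these problems. To that end, we study object-centric representations of interaction policies, abstracting the interaction problem into a perception problem about the object. Using self-supervised learning, we output plausible ways to interact for each interaction skill, including positions and motion trajectories. This approach decouples the robot from the object: robots of different models can complete the same task by following the same motion trajectory, and the method also generalizes across object categories.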

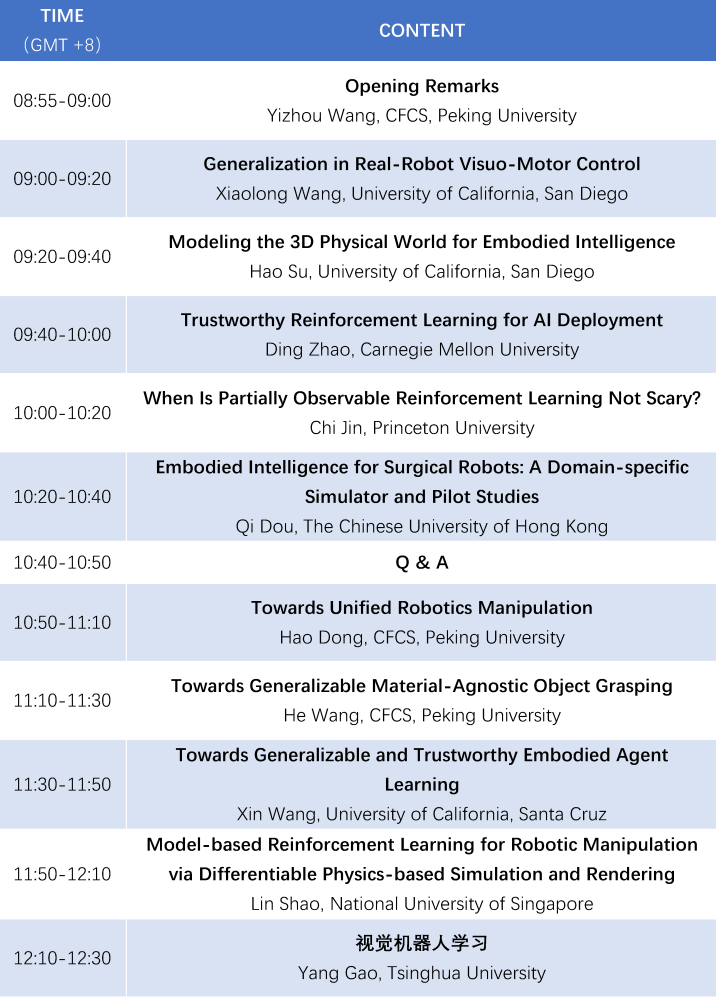

Biography

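Hao Dong is an Assistant Professor and doctoral supervisor at the School of Computer Science, Peking University. He received his Ph.D. from Imperial College London in 2019. His main research areas are computer vision, robotics, and embodied intelligence, and his current work centers on autonomous decision-making and generalizable interaction for intelligent robots. He serves as an Area Chair for CVPR 2023, a Senior Program Committee member for AAAI 2023, and an associate editorial board member of Machine Intelligence Research, a Chinese science and technology core journal. He has published more than 30 papers in top international conferences and journals, including NeurIPS, ICLR, ICCV, ECCV, and IROS, with more than 3,000 citations. He is an author of "Deep Reinforcement Learning: Fundamentals, Research and Applications". His awards include the ACM MM Best Open Source Software Award, the OpenI community Outstanding Open Source Project award from the New Generation Artificial Intelligence Industry Technology Innovation Strategic Alliance, selection for the Springer Nature highlights of high-impact research by Chinese authors, and the Outstanding Author Award from the Publishing House of Electronics Industry.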

7. Towards Generalizable Material-Agnostic Object Grasping

Abstract

Object grasping is a crucial technology for many embodied intelligent systems. Recent years have witnessed great progress in developing depth-based grasping methods. These methods can reliably detect 6-DoF grasps in cluttered table-top scenes mainly composed of objects with diffuse materials. However, grasping transparent and specular objects, which are ubiquitous in daily life and need to be handled by robotic systems, is still very challenging. Depth sensors struggle to sense transparent and specular objects and usually produce wrong or even missing depths, which in turn causes those methods to fail at grasping. In this talk, I will introduce two recent works on learning material-agnostic 6-DoF grasp detection that can work on scenes with transparent and highly specular objects: 1) an ECCV 2022 work, DREDS, that leverages domain randomization and depth sensor simulation to generate a large-scale simulated depth dataset, enabling depth restoration and transparent object grasping; 2) GraspNeRF, which leverages a generalizable NeRF to build an implicit scene representation and enable end-to-end grasp detection with only sparse RGB inputs.

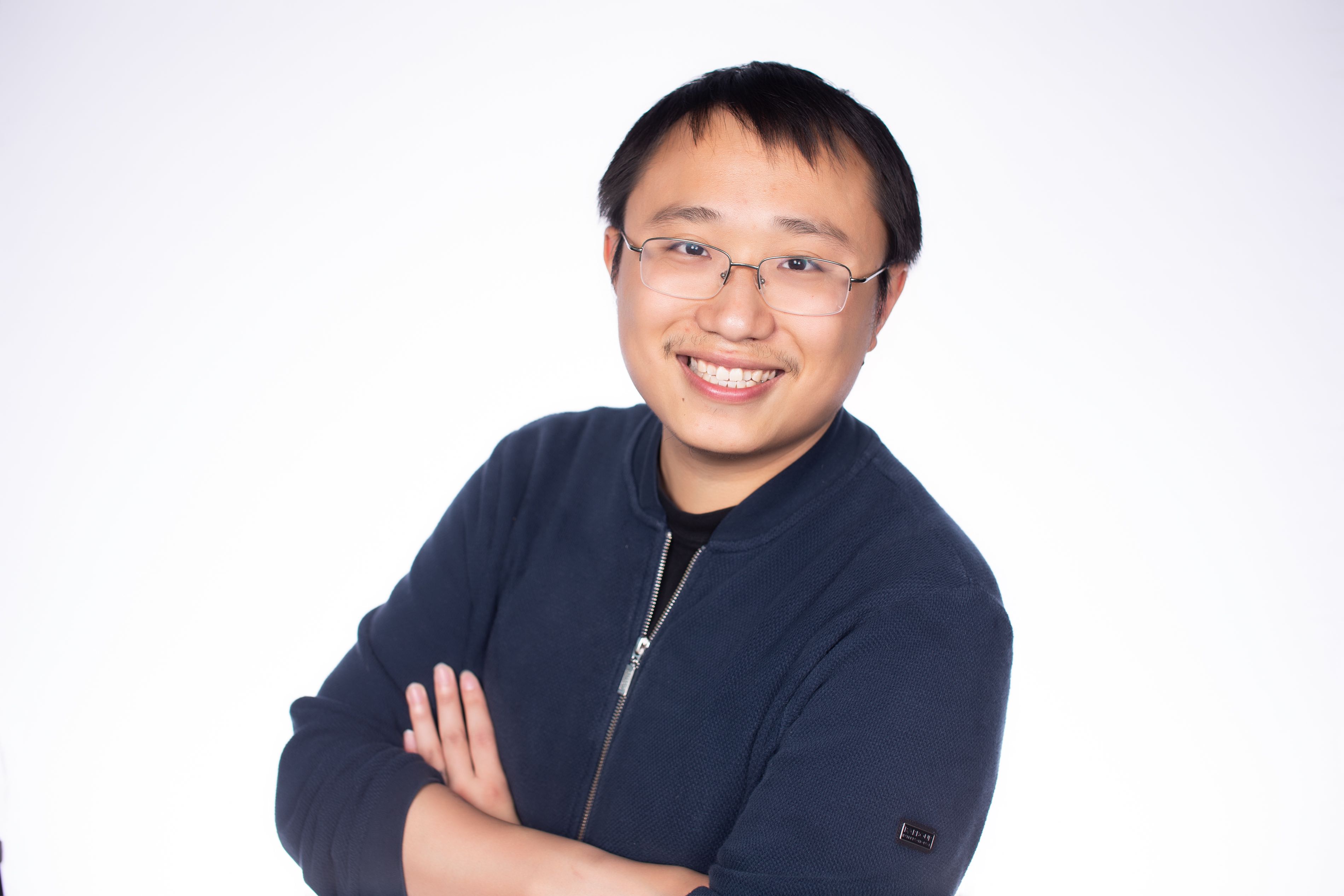

Biography

He Wang is a tenure-track assistant professor in the Center on Frontiers of Computing Studies (CFCS), School of Computer Science at Peking University, where he founded and leads the Embodied Perception and InteraCtion (EPIC) Lab. His research interests span 3D vision, robotics, and machine learning, with a special focus on embodied AI. His research objective is to endow robots working in complex real-world scenes with generalizable 3D vision and interaction policies in a scalable way. He has published more than 20 papers in top conferences and journals in computer vision, robotics, and learning (CVPR/ICCV/ECCV/TRO/RAL/NeurIPS/AAAI). His work has received the 2022 World Artificial Intelligence Conference Youth Outstanding Paper (WAICYOP) Award, a Eurographics 2019 best paper honorable mention, and first place in the "no external annotation" track of the ManiSkill Challenge 2021. He serves as an area chair for CVPR 2022 and WACV 2022. Prior to joining Peking University, he received his Ph.D. degree from Stanford University in 2021 under the supervision of Prof. Leonidas J. Guibas and his Bachelor's degree from Tsinghua University in 2014.

8. Towards Generalizable and Trustworthy Embodied Agent Learning

Abstract

(generated by ChatGPT): Recent advances in artificial intelligence (AI) and machine learning have enabled the development of embodied agents that can interact with the world in complex ways. However, these agents often struggle to generalize their learning to new environments and situations, and their behavior is often unpredictable and untrustworthy. In this talk, we will discuss recent research that aims to address these challenges and enable embodied agents to learn in a more generalizable and trustworthy manner. We will explore key technologies such as neuro-symbolic reasoning, federated learning, and prompt learning, and we will show how they can be used to improve the performance and reliability of embodied agents. We will also discuss some of the ethical implications of these developments and the importance of building trustworthy AI systems. By the end of the talk, attendees will have a better understanding of the current state of the art in embodied agent learning and the challenges and opportunities that lie ahead.

Biography

Xin (Eric) Wang is an Assistant Professor of Computer Science and Engineering at UC Santa Cruz. His research interests include Natural Language Processing, Computer Vision, and Machine Learning, with a focus on building embodied AI agents that can communicate with humans through natural language to perform real-world multimodal tasks.

Xin has served as an Area Chair for conferences such as ACL, NAACL, EMNLP, and ICLR, as well as a Senior Program Committee member for AAAI and IJCAI. He has also organized workshops and tutorials at conferences such as ACL, NAACL, CVPR, and ICCV.

Xin has received several awards and recognitions for his work, including the CVPR Best Student Paper Award (2019) and a Google Research Faculty Award (2022), as well as three Amazon Alexa Prize Awards (SimBot, TaskBot, and SocialBot, 2022-2023).

9. Model-based Reinforcement Learning for Robotic Manipulation via Differentiable Physics-based Simulation and Rendering

Abstract

Model-based reinforcement learning (MBRL) is widely recognized as having the potential to be significantly more sample efficient than model-free reinforcement learning. How to develop an accurate model automatically and efficiently from raw sensory inputs (such as images), especially for complex environments and tasks, is a challenging problem that hinders the broad application of MBRL in the real world. Recent developments in differentiable physics-based simulation and rendering provide a potential direction.

In this talk, I will introduce a learning framework called SAGCI that leverages differentiable physics simulation to model the environment. It takes raw point clouds as inputs and produces an initial model represented as a Unified Robot Description Format (URDF) file, which is loaded into the simulation. The robot then uses interactive perception to verify and modify the model online. We propose a model-based learning algorithm combining object-centric and robot-centric stages to produce policies that accomplish manipulation tasks. Next, I will present a sensing-aware model-based reinforcement learning system called SAM-RL, which combines differentiable physics simulation and rendering. SAM-RL automatically updates the model by comparing the rendered images with real raw images and produces the policy efficiently. With the sensing-aware learning pipeline, SAM-RL allows a robot to select an informative viewpoint to monitor the task process. We apply our framework in real-world experiments to accomplish three manipulation tasks: robotic assembly, tool manipulation, and deformable object manipulation. I will close this talk by discussing the lessons learned and interesting open questions that remain.

Biography

Lin Shao is an Assistant Professor in the Department of Computer Science at the National University of Singapore (NUS), School of Computing. His research interests lie at the intersection of Robotics and Artificial Intelligence. His long-term goal is to build general-purpose robotic systems that intelligently perform a diverse range of tasks in a large variety of environments in the physical world. Specifically, his group is interested in developing algorithms and systems to provide robots with the abilities of perception and manipulation. He is a co-chair of the Technical Committee on Robot Learning in the IEEE Robotics and Automation Society. Previously, he received his Ph.D. at Stanford University and his B.S. at Nanjing University.

10. Vision-Based Robot Learning

Abstract

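This talk starts from the concept of a minimum viable robot, viewed from the perspectives of perception and behavior, and lays out the problem of vision-based robot learning. It then discusses recent progress on the problem's two main challenges: robot policy learning algorithms and visual representation models. On the policy learning side, learning in the real world faces two major difficulties: the low sample efficiency of algorithms and the difficulty of defining reward functions automatically. We propose corresponding solutions (EfficientZero and EfficientImitate) that address part of these difficulties. On the visual representation side, we will try to answer the question "What kind of visual representation is good for robots?" We will systematically review the exciting progress made in this area over the past year and point out inconsistencies in existing work. We will also discuss some of our recent work on unsupervised object tracking and unsupervised multi-object representation learning.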

Biography

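Yang Gao is an Assistant Professor at the Institute for Interdisciplinary Information Sciences, Tsinghua University, working mainly on computer vision and robotics. He received his Ph.D. from the University of California, Berkeley, advised by Prof. Trevor Darrell, and also completed postdoctoral work at UC Berkeley in collaboration with Pieter Abbeel and others. Before that, he graduated from the Department of Computer Science at Tsinghua University, where he worked with Prof. Jun Zhu on Bayesian inference. He conducted research on natural language processing at Google Research from 2011 to 2012, worked on the camera perception team at Waymo, Google's self-driving unit, in 2016, and carried out research on end-to-end autonomous driving with Dr. Vladlen Koltun at Intel Labs in 2018. He has published multiple papers at top AI conferences, including NeurIPS, ICML, CVPR, ECCV, and ICLR, with more than 2,000 citations on Google Scholar.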

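Co-organizers

Ordered by surname initial

Hao Dong

Assistant Professor, Center on Frontiers of Computing Studies, Peking University

Research interests: intelligent robotics, computer vision

He Wang

Assistant Professor, Center on Frontiers of Computing Studies, Peking University

Research interests: embodied AI, 3D vision, robotics

About the Center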

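The Center on Frontiers of Computing Studies (CFCS) at Peking University was founded in December 2017 as one of the university's new-system research institutions. Its co-directors are John Hopcroft, Turing Award laureate, recipient of the Chinese Government Friendship Award, foreign member of the Chinese Academy of Sciences, and Visiting Chair Professor at Peking University, and Wen Gao, member of the Chinese Academy of Engineering and Boya Chair Professor at Peking University.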

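Working at the international frontier of computer science, the center conducts exploratory research in computing theory, frontier computing methods, and the intersections of computing with robotics, economics, art, sports, and other fields. It aims to establish a computing science research center with world-class influence and to form a cross-disciplinary, integrative support center for computing applications.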

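The center fosters a relaxed, free, and international academic environment that helps young faculty grow into world-class scholars in computer science. Through initiatives such as the Turing talent training program, it is building an internationally advanced system for training talent in computing science and related interdisciplinary fields, cultivating outstanding people who will lead the nation's scientific development and industrial innovation in the new era.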