[NeurIPS 2023] GenPose: Generative Category-level Object Pose Estimation via Diffusion Models

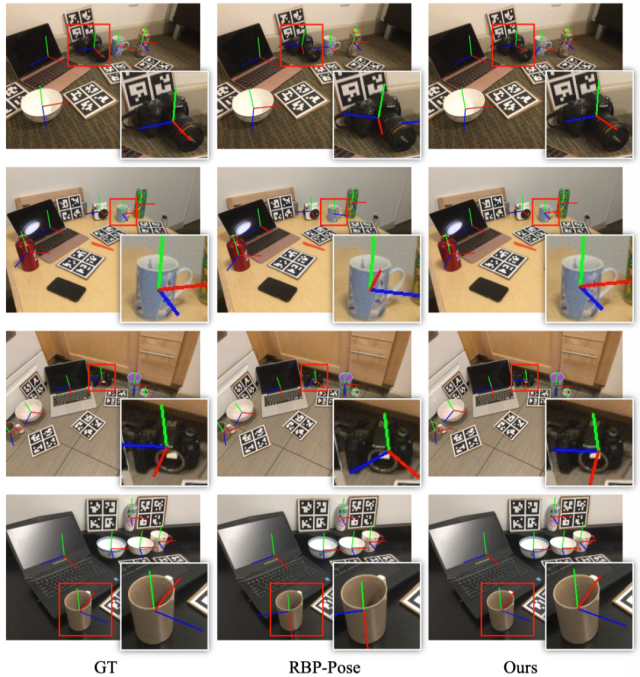

Object pose estimation plays a vital role in embodied AI and computer vision, enabling intelligent agents to comprehend and interact with their surroundings. Despite the practicality of category-level pose estimation, current approaches encounter challenges with partially observed point clouds, known as the multi-hypothesis issue. In this study, we propose a novel solution by reframing category-level object pose estimation as conditional generative modeling, departing from traditional point-to-point regression. Leveraging score-based diffusion models, we estimate object poses by sampling candidates from the diffusion model and aggregating them through a two-step process: filtering out outliers via likelihood estimation and subsequently mean-pooling the remaining candidates. To avoid the costly integration process when estimating the likelihood, we introduce an alternative method that trains an energy-based model from the original score-based model, enabling end-to-end likelihood estimation. Our approach achieves state-of-the-art performance on the REAL275 dataset, surpassing 50% and 60% on the strict 5°2cm and 5°5cm metrics, respectively. Furthermore, our method demonstrates strong generalizability to novel categories sharing similar symmetric properties without fine-tuning and can readily adapt to object pose tracking tasks, yielding results comparable to the current state-of-the-art baselines.
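The two-step aggregation described above (rank candidates by estimated likelihood, drop the outliers, mean-pool the rest) can be illustrated with a minimal NumPy sketch. This is not the repository's implementation: the function name `aggregate_poses`, the `keep_ratio` parameter, the `(w, x, y, z)` quaternion layout, and the sign-aligned quaternion averaging are all assumptions made for illustration, and the scores are taken as given rather than produced by an energy-based model.

```python
import numpy as np

def aggregate_poses(quats, trans, scores, keep_ratio=0.6):
    """Hypothetical helper: filter pose candidates by score, then mean-pool.

    quats:  (K, 4) unit quaternions, assumed (w, x, y, z) order
    trans:  (K, 3) translations
    scores: (K,) higher means more likely (e.g. an energy-model output)
    """
    quats = np.asarray(quats, dtype=float)
    trans = np.asarray(trans, dtype=float)
    scores = np.asarray(scores, dtype=float)

    # Step 1: keep only the top-scoring fraction of candidates.
    k = max(1, int(round(keep_ratio * len(scores))))
    keep = np.argsort(scores)[::-1][:k]

    # Step 2: mean-pool the survivors.
    # q and -q encode the same rotation, so align signs to the best
    # candidate before averaging, then renormalize to unit length.
    q = quats[keep]
    signs = np.where(q @ q[0] < 0, -1.0, 1.0)
    q_mean = (q * signs[:, None]).mean(axis=0)
    q_mean /= np.linalg.norm(q_mean)

    t_mean = trans[keep].mean(axis=0)
    return q_mean, t_mean

# Toy usage: three consistent candidates and one low-score outlier.
quats = [[1, 0, 0, 0], [1, 0, 0, 0], [-1, 0, 0, 0], [0, 1, 0, 0]]
trans = [[0, 0, 1], [0, 0, 1], [0, 0, 1], [5, 5, 5]]
scores = [0.9, 0.8, 0.7, 0.1]
q_mean, t_mean = aggregate_poses(quats, trans, scores, keep_ratio=0.75)
```

Averaging quaternions component-wise is only a reasonable approximation when the surviving candidates are close to each other, which is the situation the filtering step is meant to produce.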

Paper: https://arxiv.org/abs/2306.10531

Video: https://www.bilibili.com/video/BV1Eg4y1Z7x2/