What If The Majority Is Wrong?

Introduction:

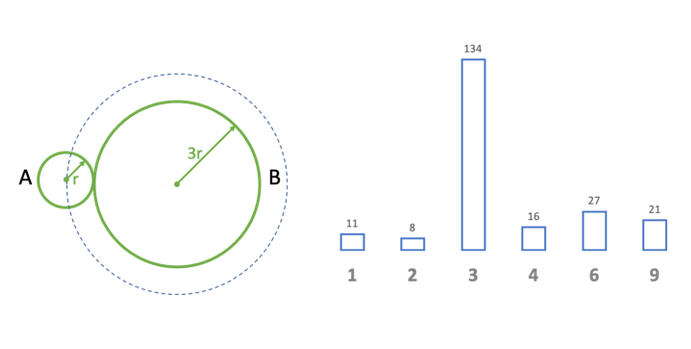

This post introduces our recent work, "Eliciting Thinking Hierarchy without a Prior" [Kong, Li, Zhang, Huang, and Wu, NeurIPS 2022]. Let's start by considering a math problem. The radius of Circle A is 1/3 the radius of Circle B. Circle A rolls around Circle B for one full trip, back to its starting point. How many times will Circle A revolve in total?

If your answer is "3", then we have good news and bad news for you. The good news is that you have the same answer as the majority. The bad news is that the majority is wrong.

The above scenario is not rare. With prior knowledge like the expertise level of each individual respondent, we may know whether the majority is wrong. However, sometimes it's quite difficult to obtain prior knowledge, especially in new fields.

The central difficulty is that, without any prior, we do not know whether to follow "the wisdom of crowds" or to believe that "truth always rests with the minority".

Take the circle problem as an example.

We have collected answers "1 (11 people), 2 (8 people), 3 (134 people), 4 (16 people), 6 (27 people), 9 (21 people)".

Collected answers for the circle problem
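To make the plurality baseline concrete, here is a minimal sketch that tallies the counts listed above. The function name `plurality` is ours, for illustration only.

```python
# Answer counts for the circle problem, copied from the list above.
circle_counts = {"1": 11, "2": 8, "3": 134, "4": 16, "6": 27, "9": 21}


def plurality(counts):
    """Return the most popular answer and its share of all responses."""
    total = sum(counts.values())
    winner = max(counts, key=counts.get)
    return winner, counts[winner] / total


winner, share = plurality(circle_counts)
print(winner, round(share, 3))  # the plurality answer and its vote share
print(round(circle_counts["4"] / sum(circle_counts.values()), 3))  # share of "4"
```

Under plurality voting, "3" wins with roughly 62% of the 217 responses, while "4" receives about 7%.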

As mentioned above, the majority is wrong here, so we need additional information beyond popularity to rank the answers. Prelec, Seung, and McCoy (2017) propose an innovative approach called "surprisingly popular". They prepare multiple options, ask the respondents to pick one option and, more importantly, to predict the distribution of other people's choices. They use the predictions to construct a prior distribution over the choices, and then select the choice that is most popular relative to that prior. Additionally, Rothschild and Wolfers (2011) use voters' expectations of other voters to better predict election results.

Similarly, we ask respondents:

What's your answer?

What do you think other people will answer?

Unlike previous work, the above questions are open-response. For example, one respondent can answer "3", and "I think other people will answer 6". Thus, the requester does not need prior knowledge to design the options. The respondents do not need to report a whole distribution over all options.

To rank the answers, we use the hypothesis from Kong and Schoenebeck (2018): More sophisticated agents know the mind of less sophisticated agents, but not vice versa.

This is very similar to level-k theory [Stahl and Wilson, 1995] and cognitive hierarchy theory [Camerer, Ho, and Chong, 2004], which assume that people have different levels of sophistication and that more sophisticated players can best respond to less sophisticated players in games.

We focus on the problem-solving setting and propose a thinking hierarchy framework which

1. learns the thinking hierarchy without any prior;

2. ranks the answers such that the higher-ranking answers, which may not be supported by the majority, are from more sophisticated people.

Method:

Answer-prediction matrix:

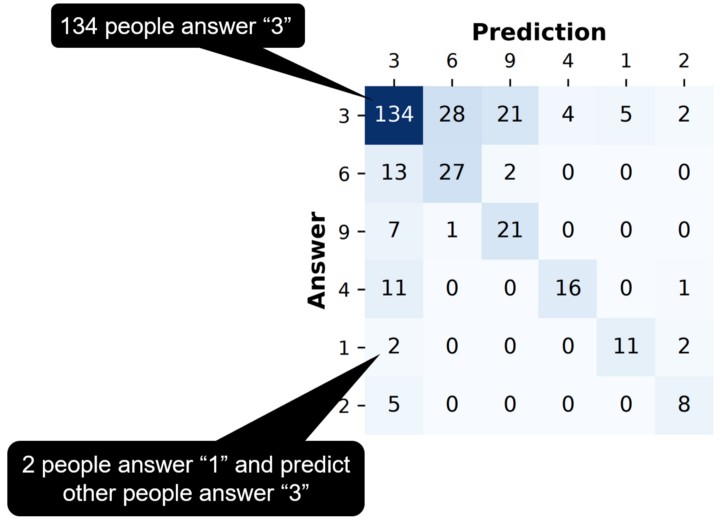

After collecting respondents' answers and predictions, we construct a matrix called the answer-prediction matrix:

1. Non-diagonal elements: The element at (x,y) indicates how many respondents give the xth answer and predict the yth answer. For example, the entry in the fifth row and first column of the following matrix indicates that two respondents answer "1" and think that other people will answer "3".

2. Diagonal elements: The element at (x,x) indicates how many respondents give the xth answer. For example, the entry in the first row and first column of the following matrix indicates that 134 respondents answer "3".

Answer-prediction matrix
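The construction above can be sketched in a few lines. This is an illustration under our own assumptions, not the paper's code: each respondent gives one answer and at most one prediction, a prediction equal to the respondent's own answer is ignored, and the toy responses are made up.

```python
def build_answer_prediction_matrix(responses, answers):
    """Build the answer-prediction matrix as a list of rows.

    responses: list of (answer, prediction) pairs; prediction may be None.
    answers:   ordered list of distinct answers, fixing row/column order.
    """
    index = {a: i for i, a in enumerate(answers)}
    n = len(answers)
    matrix = [[0] * n for _ in range(n)]
    for answer, prediction in responses:
        x = index[answer]
        matrix[x][x] += 1  # diagonal: how many respondents give this answer
        if prediction is not None and prediction != answer:
            # off-diagonal: answered x and predicted y
            matrix[x][index[prediction]] += 1
    return matrix


# Hypothetical toy data (not from our studies).
toy_responses = [("3", "6"), ("3", None), ("4", "3"), ("4", "3"), ("6", "3")]
toy_matrix = build_answer_prediction_matrix(toy_responses, ["3", "4", "6"])
for row in toy_matrix:
    print(row)
```

In this toy example, both supporters of "4" predict "3", so the entry in the row for "4" and the column for "3" is 2.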

How to rank the answers?

We rearrange the rows and columns (applying the same permutation to both) to maximize the sum of squares of the elements in the upper-triangular area. The intuition is that supporters of a higher-ranking answer should be able to predict lower-ranking answers, but not vice versa. Thus, we want to rank the answers such that, approximately, only the upper-triangular area has non-zero elements. See the paper for more details on the theoretical justification for this algorithm.
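As a rough illustration of this ranking step, the sketch below tries every ordering of the answers and keeps the one with the largest sum of squares above the diagonal. The brute-force search, the function names, and the toy matrix are our assumptions for this post; the search is only practical for a handful of answers, and the paper's algorithm may be implemented differently. The diagonal is left out of the score because its sum of squares is the same for every ordering.

```python
from itertools import permutations


def upper_triangle_score(matrix, order):
    """Sum of squared entries strictly above the diagonal after reordering."""
    n = len(order)
    return sum(
        matrix[order[i]][order[j]] ** 2
        for i in range(n)
        for j in range(i + 1, n)
    )


def rank_answers(matrix, answers):
    """Return the answers in the order that maximizes the upper-triangle score."""
    best = max(
        permutations(range(len(answers))),
        key=lambda order: upper_triangle_score(matrix, order),
    )
    return [answers[i] for i in best]


# Hypothetical toy matrix; rows and columns are ordered as "3", "4", "6".
toy_answers = ["3", "4", "6"]
toy_matrix = [
    [10, 0, 1],  # supporters of "3"
    [3, 4, 2],   # supporters of "4" also predict "3" and "6"
    [2, 0, 5],   # supporters of "6" also predict "3"
]
print(rank_answers(toy_matrix, toy_answers))  # ['4', '6', '3']
```

Here the plurality answer "3" ends up at the bottom, because its supporters rarely predict the other answers while the supporters of the other answers do predict "3".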

The following matrix is the ranked answer-prediction matrix for the circle problem. The correct answer "4" is on the top, though it is only supported by 16 respondents. (Interested readers are referred to this website for explanations for the correct answer.)

Ranked answer-prediction matrix
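For readers who want a quick check of why "4" is correct, here is a short derivation. It assumes Circle A rolls along the outside of Circle B without slipping; the symbols r, R, and N are ours.

```latex
% r: radius of Circle A, R = 3r: radius of Circle B (symbols are ours).
% The center of A travels along a circle of radius R + r = 4r.
% Rolling without slipping: A turns through (distance traveled by its center) / r.
\[
  N \;=\; \frac{2\pi (R + r)}{2\pi r} \;=\; \frac{R + r}{r} \;=\; \frac{3r + r}{r} \;=\; 4 .
\]
% The popular answer "3" is the ratio of circumferences, 2\pi R / (2\pi r) = 3,
% which misses the one extra revolution A makes by going once around B.
```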

Studies:

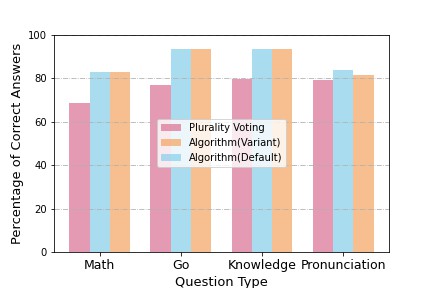

We conduct four studies: study 1 (35 math problems), study 2 (30 Go problems), study 3 (44 general knowledge questions), and study 4 (43 Chinese character pronunciation questions). We compare our approach to the baseline, plurality voting, in terms of the accuracy of the top-ranking answer. Our approach beats plurality voting in all four studies.

We have introduced the default ranking algorithm; see the paper for a variant.

In addition to the circle problem, here are more examples of our empirical results.

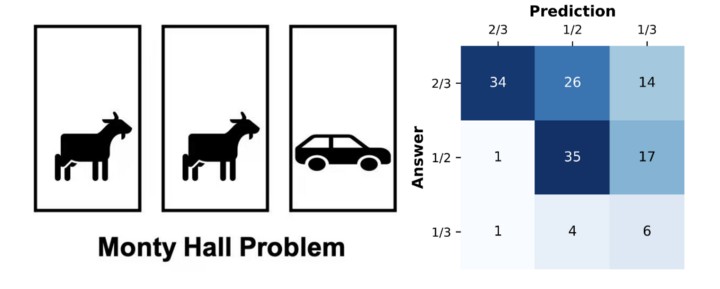

Monty Hall problem: You can select one closed door of three. A prize, a car, is behind one of the doors. The other two doors hide goats. After you have made your choice, Monty Hall will open one of the remaining doors and show that it does not contain the prize. He then asks you if you would like to switch your choice to the other unopened door. What is the probability of getting the prize if you switch?

The Monty Hall problem's intuitive answer is "1/2" since the choices seem to be equally good. The correct answer is "2/3".
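The "2/3" can be verified by enumerating every case exactly. The sketch below assumes the prize is placed uniformly at random, your first pick is uniform, and Monty chooses uniformly when he has two goat doors to open; the function name is ours.

```python
from fractions import Fraction
from itertools import product


def win_probability(switch):
    """Exact probability of winning the car, with or without switching."""
    doors = range(3)
    total = Fraction(0)
    for prize, pick in product(doors, repeat=2):  # 9 equally likely cases
        # Monty may open any door that is neither your pick nor the prize.
        openable = [d for d in doors if d != pick and d != prize]
        for opened in openable:
            weight = Fraction(1, 9) / len(openable)
            if switch:
                # Move to the only door that is neither picked nor opened.
                final = next(d for d in doors if d not in (pick, opened))
            else:
                final = pick
            if final == prize:
                total += weight
    return total


print(win_probability(switch=True))   # 2/3
print(win_probability(switch=False))  # 1/3
```

Switching wins exactly when the first pick was wrong, which happens with probability 2/3.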

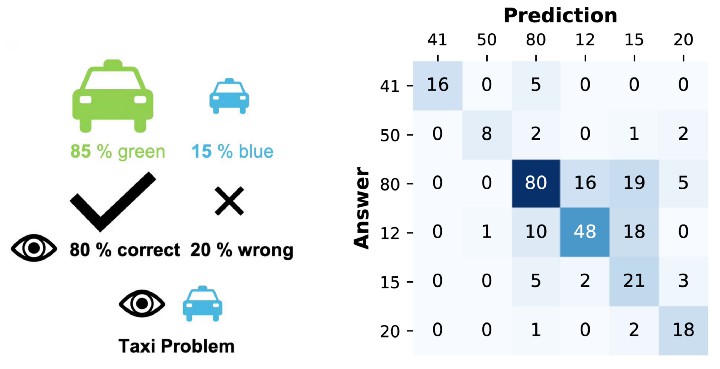

Taxicab problem: 85% of taxis in this city are green, and the others are blue. A witness sees a blue taxi. She is usually correct with a probability of 80%. What is the probability that the taxi seen by the witness is blue?

The Taxicab problem has two pieces of information: a base rate and a witness's testimony. The plurality ignores the base rate and answers "80%".
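Combining the two pieces of information with Bayes' rule gives the correct answer of about 41%. The sketch below assumes the witness is right 80% of the time regardless of the taxi's true color; the function name is ours.

```python
from fractions import Fraction


def posterior_blue(prior_blue, witness_accuracy):
    """P(taxi is blue | witness says blue), by Bayes' rule."""
    # Taxi is blue and the witness correctly says blue.
    blue_and_says_blue = prior_blue * witness_accuracy
    # Taxi is green and the witness mistakenly says blue.
    green_and_says_blue = (1 - prior_blue) * (1 - witness_accuracy)
    return blue_and_says_blue / (blue_and_says_blue + green_and_says_blue)


p = posterior_blue(Fraction(15, 100), Fraction(80, 100))
print(p, round(float(p), 3))  # 12/29 0.414
```

Because blue taxis are rare (15%), the posterior of 12/29 is far below the witness's 80% accuracy.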

Boundary river between China and North Korea: What river forms the boundary between North Korea and China?

74 respondents answer "Yalu" while our approach ranks the correct answer "Yalu & Dooman" the highest, though it is only supported by 3 respondents.

Life and death problem: Pick a move for black such that they can be alive.

The plurality answer C2 is an aggressive move for black where black can capture a white stone soon.

In all of these examples, the plurality is incorrect while our approach is correct. The results of other questions are illustrated on this website.

Interesting Insights:

1. "Slow thinking" predicts "fast thinking"

"Thinking, Fast and Slow" proposes that people have two systems: a fast, intuitive System 1 and a slow, logical System 2. For example, suppose Alice tries to solve the circle problem. When she first reads the question, she runs her intuitive System 1 and obtains the answer "3". When she thinks more carefully, she runs System 2 and obtains the answer "4". Some problems in our studies are borrowed from "Thinking, Fast and Slow", and our results show that "slow thinking" predicts "fast thinking".

2. People have a very rich thinking hierarchy

The taxicab problem is borrowed from "Thinking, Fast and Slow". Tversky and Kahneman (1974) show that people usually ignore the base rate and report "80%". One might therefore imagine the hierarchy "41% -> 80%". We obtain a much richer hierarchy: "41% -> 50% -> 80% -> 12% -> 15% -> 20%".

3. Experts may fail to predict laypeople, while the middle level can

In the taxicab problem, supporters of the correct answer "41%" successfully predict the common wrong answer "80%". However, they fail to predict the surprising wrong answers "12%" and "20%", which, in contrast, are successfully predicted by supporters of "80%". Moreover, although "12%" has 48 supporters, none of them predicts "20%", whereas "15%" has only 21 supporters and 3 of them predict "20%".

Discussion and Conclusion:

We asked a class of students at Peking University: why are bar chairs high? The plurality answer is "the bar counter is high", and our top-ranking answer is "better eye contact with people who stand". However, to run our approach, we first need to cluster the answers by hand. Thus, to apply our approach to general open-response questions, we need to develop a better answer-clustering method.

In summary, we propose an empirically validated method to learn the thinking hierarchy without any prior in general problem-solving scenarios. Potentially, our paradigm can be used to make better decisions when we crowdsource opinions in a new field with little prior information. In the future, it would also be interesting to compare the thinking hierarchies of humans and machines.